Apple addresses concerns with CSAM detection, says any expansion will occur on a per-country basis

- Apple has addressed concerns with CSAM detection.

- Security researchers are concerned that governments could use CSAM detection for nefarious purposes.

- Apple addressed the possibility of a corrupt safety organization.

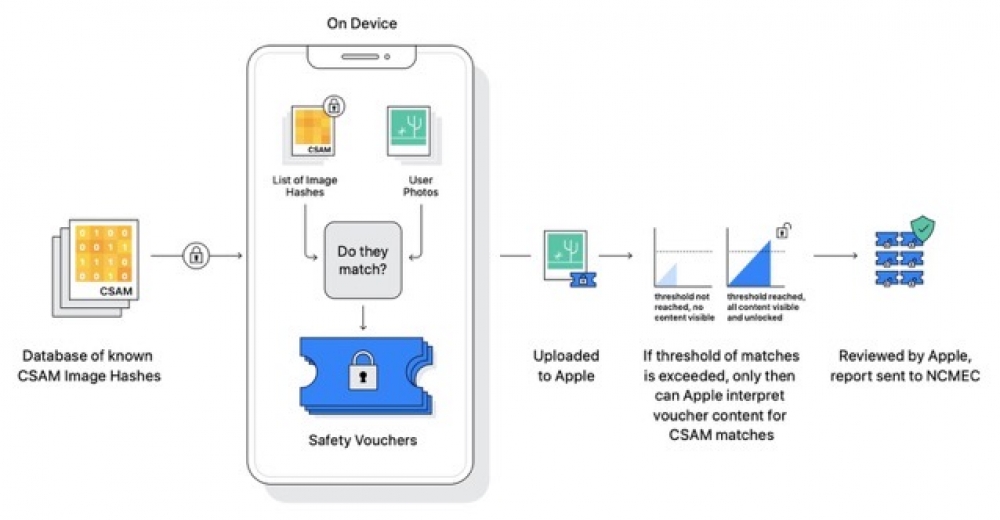

Apple announced earlier this week that, starting later this year with iOS 15 and iPadOS 15, it will be able to detect known child sexual abuse material (CSAM) images stored in iCloud Photos. Apple says this will enable it to report these instances to the National Center for Missing and Exploited Children, a nonprofit organization that works in collaboration with law enforcement agencies across the United States.

The announcement has sparked major concerns among security researchers and other parties that Apple could eventually be forced by a government to add non-CSAM images to the hash list. Researchers say a government could exploit this for nefarious purposes, such as suppressing political activism.

The nonprofit Electronic Frontier Foundation has also criticized Apple's plans, stating that "even a thoroughly documented, carefully thought out, and narrowly-scoped back door is still a back door."

Apple has addressed these concerns with additional commentary about its plans. The company says the CSAM detection system will be limited to the United States at launch, and that it is aware some governments may try to abuse the system. Apple confirmed to MacRumors that it will consider any potential global expansion of the system on a country-by-country basis after a thorough legal evaluation.

Published to Apple Scoop on 6th August, 2021.